What is Kubernetes?

Kubernetes is an open-source container orchestration platform for managing containerized applications and services. It was built to make deploying, scaling, and maintaining containers easier. The project is hosted by the Cloud Native Computing Foundation (CNCF). It reduces the risk of downtime by providing built-in mechanisms for scaling and monitoring applications.

The word Kubernetes comes from Greek and means helmsman or pilot.

Evolution of deployment

- Traditional Deployment: Organizations used to run applications on physical servers, which offered no way to set resource boundaries for each application. Applications on the same server could therefore interfere with each other's performance and cause uneven resource usage. The workaround was to host each application on its own server, but this was expensive and inefficient.

- Virtualized Deployment: Virtualization lets developers run multiple applications on one server by separating them into multiple Virtual Machines (VMs). The developer can allocate specific resources to each VM depending on the needs of the application inside. This adds an extra layer of security, because applications in different VMs on the same server are isolated from one another. Virtualization improved resource utilization and scalability, reduced hardware costs, and turned physical resources into a cluster of disposable virtual machines. Each VM runs its own OS on top of virtualized hardware.

- Container Deployment: Containers are similar to VMs, but they share the host OS kernel and are therefore more lightweight. A container has its own filesystem and its own share of CPU and memory.

How does Kubernetes work?

A key strength of Kubernetes is its declarative model: there is a single source of truth for how the cluster should look. The developer describes the desired state of the cluster in manifest files, typically kept in a Git repository, and Kubernetes handles the deployment automatically once they are applied. Its controllers continuously compare the actual state of the cluster with the desired state, and if they detect a difference they bring the cluster back to the desired state. This also limits the damage from unwanted manual changes: if someone deletes or modifies a managed Pod by hand, the change is reverted. Keeping the cluster synchronized with the Git repository itself is the job of a GitOps tool such as ArgoCD, covered later in this article.
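The idea of continuously driving the actual state toward the desired state can be illustrated with a toy loop. This is only a simulation in plain shell, not how Kubernetes controllers are implemented; the `desired` and `actual` variables and the `reconcile` function are hypothetical names chosen for the illustration.

```shell
#!/usr/bin/env bash
# Toy simulation of a reconciliation loop (no cluster required).
# "desired" stands for the declared state, "actual" for what is running.
desired=3

# Assume someone manually scaled the workload to 5 replicas: this is drift.
actual=5

reconcile() {
  # Move the actual state one step toward the desired state.
  if [ "$actual" -lt "$desired" ]; then
    actual=$((actual + 1))
    echo "started a replica (actual=$actual)"
  elif [ "$actual" -gt "$desired" ]; then
    actual=$((actual - 1))
    echo "stopped a replica (actual=$actual)"
  fi
}

# Keep reconciling until both states match again.
while [ "$actual" -ne "$desired" ]; do
  reconcile
done
echo "converged: actual=$actual desired=$desired"
```

A real controller never exits this loop; it keeps watching and reacts whenever the two states diverge.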

What does Kubernetes provide?

- Load balancing: Kubernetes can distribute heavy traffic across Pods running on many nodes, resulting in a better experience for the user.

- Automatic rollback: Kubernetes makes it easy to roll an application forward to a new version or back to a previous one and to apply that change across the whole cluster.

- Self-healing: When a container fails, Kubernetes restarts it automatically. If a restart does not fix the problem, Kubernetes replaces the container with a new one and kills the failed one.
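The three features above can be seen together in a small example. The following is a minimal sketch, not a production setup: the names (`web`), the image (`nginx:1.25`), and the probe settings are illustrative assumptions. The script only writes the manifest to a temporary file and applies it if `kubectl` is available.

```shell
#!/usr/bin/env bash
# Write an example manifest to a temporary file. All names and the image
# below are illustrative assumptions, not taken from this article.
web_manifest="$(mktemp)"
cat > "$web_manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3                  # three Pods share the traffic
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
          livenessProbe:       # repeated probe failures trigger a container restart
            httpGet:
              path: /
              port: 80
---
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  selector:
    app: web                   # traffic is spread across Pods with this label
  ports:
    - port: 80
      targetPort: 80
EOF

# Apply only if kubectl is available, so the script is safe to run anywhere.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$web_manifest"
  kubectl rollout status deployment/web --timeout=120s
fi

# After a bad release, the previous revision can be restored with:
#   kubectl rollout undo deployment/web
```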

Kubernetes components

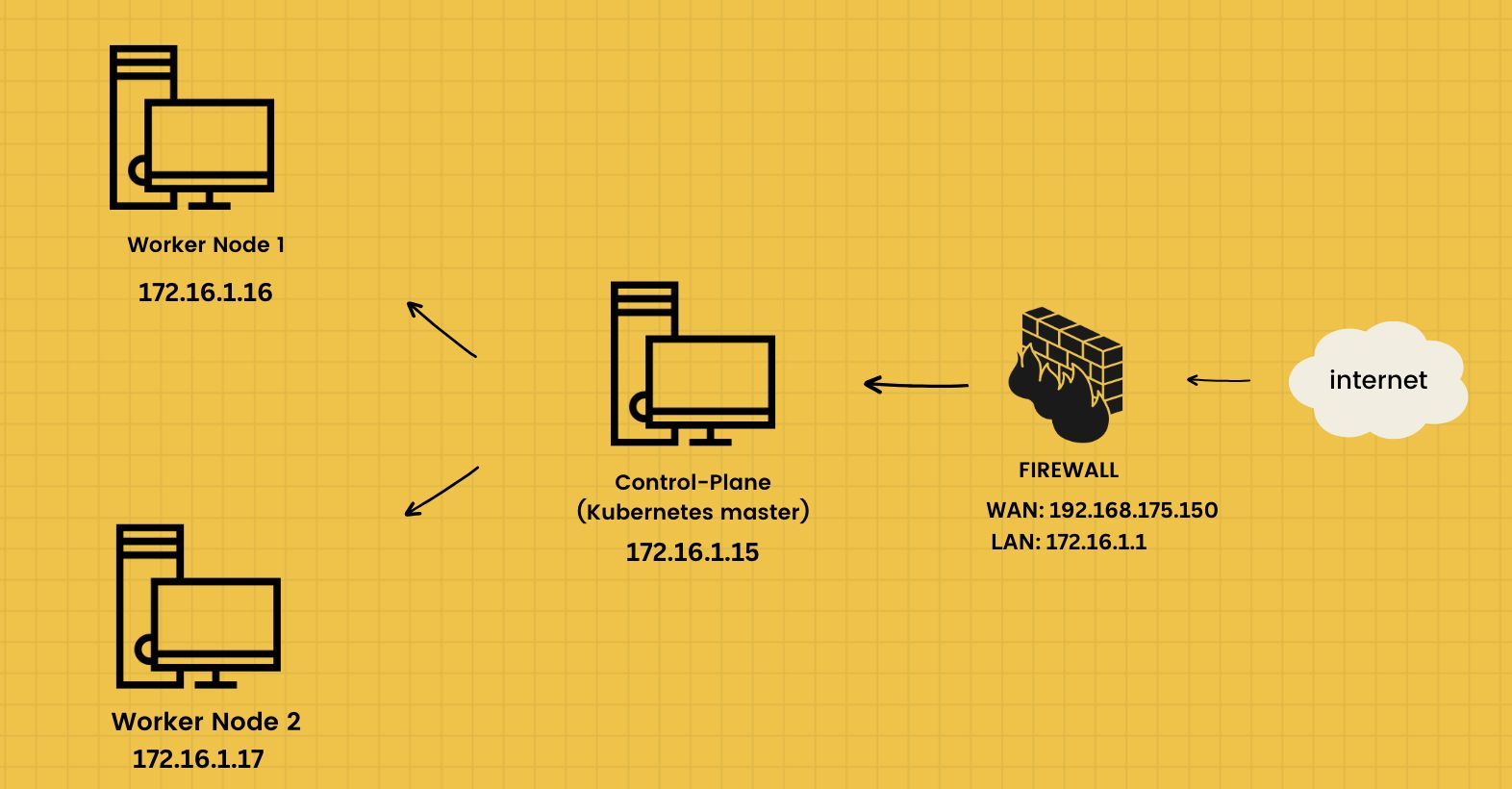

A Kubernetes cluster consists of a master node (the control plane) that controls the worker nodes, which ultimately host the containers.

Control-Plane

The control plane consists of the components that run the Kubernetes cluster. They are responsible for scheduling and for detecting and responding to cluster events, for example by forcing the actual state of the cluster to match the desired state.

Control-plane components:

- kube-apiserver: Exposes the Kubernetes API and acts as the front end of the control plane.

- etcd: A consistent key-value store that holds all the Kubernetes cluster data.

- kube-scheduler: Watches for newly created Pods that have no node assigned and assigns each of them to the most suitable node.

- kube-controller-manager: Runs the controller processes, such as the node controller and the Job controller, each of which drives one part of the cluster toward its desired state.

Worker Node

Worker nodes are responsible for executing the application workloads. They are managed by the control plane, which decides which node to assign an application to based on the availability of all worker nodes. A worker node is a physical or virtual machine that runs containerized applications.

Worker node components:

- kubelet: An agent that runs on each node and makes sure that containers are running in a Pod. The kubelet receives Pod specifications that describe how Pods should behave, and it makes sure the containers in them are running as they are supposed to.

- kube-proxy: Maintains the network rules on each node that allow or reject communication with Pods from inside and outside the cluster.

- Container runtime: The software that actually runs the containers, such as containerd or CRI-O.

- DNS: Every Kubernetes cluster should have cluster DNS. It acts as a DNS server for the Services and Pods in the cluster.

- Network plugins: Implement the Container Network Interface (CNI) specification. They assign IP addresses to Pods and enable them to communicate with each other.
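On a running cluster, most of the components listed above can be observed directly. The sketch below assumes a kubeadm-style cluster, where the control-plane components, kube-proxy, the DNS add-on, and the network plugin typically run as Pods in the `kube-system` namespace; other distributions may place them elsewhere. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# List the Pods that make up the cluster's system components.
show_system_components() {
  if ! command -v kubectl >/dev/null 2>&1; then
    echo "kubectl not found; run this on a machine with cluster access"
    return 0
  fi
  # -o wide adds the node each Pod is scheduled on.
  kubectl get pods -n kube-system -o wide
}

show_system_components
```

The kubelet and the container runtime do not appear in this list, because they run as services on each node rather than as Pods.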

Creating a Kubernetes cluster

To create a cluster, I designed this simple network.

*I will be using Ubuntu 22.04 for Master and Worker servers*

- Disable swap on all the servers.

swapoff -a

sed -i '/swap/d' /etc/fstab

- Add the overlay and br_netfilter kernel modules to /etc/modules-load.d/crio.conf

cat >>/etc/modules-load.d/crio.conf<<EOF

overlay

br_netfilter

EOF

modprobe overlay

modprobe br_netfilter

- Provide networking capabilities to containers

cat >>/etc/sysctl.d/kubernetes.conf<<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system

- Setup a firewall

ufw enable

- Open some ports for the control plane

sudo ufw allow 6443/tcp

sudo ufw allow 2379:2380/tcp

sudo ufw allow 10250/tcp

sudo ufw allow 10259/tcp

sudo ufw allow 10257/tcp

- Open some ports for the Calico CNI

sudo ufw allow 179/tcp

sudo ufw allow 4789/udp

sudo ufw allow 4789/tcp

sudo ufw allow 2379/tcp

- Open some ports for the worker nodes

sudo ufw allow 10250/tcp

sudo ufw allow 30000:32767/tcp

sudo ufw allow 179/tcp

sudo ufw allow 4789/udp

sudo ufw allow 4789/tcp

sudo ufw allow 2379/tcp

- Install the CRI-O container runtime

OS=xUbuntu_22.04

CRIO_VERSION=1.28

echo "deb https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/ /"|sudo tee /etc/apt/sources.list.d/devel:kubic:libcontainers:stable.list

echo "deb http://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable:/cri-o:/$CRIO_VERSION/$OS/ /"|sudo tee /etc/apt/sources.list.d/devel:kubic:libcontainers:stable:cri-o:$CRIO_VERSION.list

curl -L https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable:cri-o:$CRIO_VERSION/$OS/Release.key | sudo apt-key --keyring /etc/apt/trusted.gpg.d/libcontainers.gpg add -

curl -L https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/Release.key | sudo apt-key --keyring /etc/apt/trusted.gpg.d/libcontainers.gpg add -

sudo apt-get update

sudo apt-get install -qq -y cri-o cri-o-runc cri-tools

systemctl daemon-reload

systemctl enable --now crio

- Install Kubernetes

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -

apt-add-repository "deb http://apt.kubernetes.io/ kubernetes-xenial main"

apt install -qq -y kubeadm=1.26.0-00 kubelet=1.26.0-00 kubectl=1.28.0-00

- Initialize Kubernetes Cluster on the Control Plane

systemctl enable kubelet

kubeadm config images pull

kubeadm init --pod-network-cidr=192.168.0.0/16 --cri-socket unix:///var/run/crio/crio.sock

export KUBECONFIG=/etc/kubernetes/admin.conf

- Deploy the Calico network

kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/calico.yaml

- Join the cluster from worker nodes

*The joining command will be printed after initializing the cluster on the control plane*

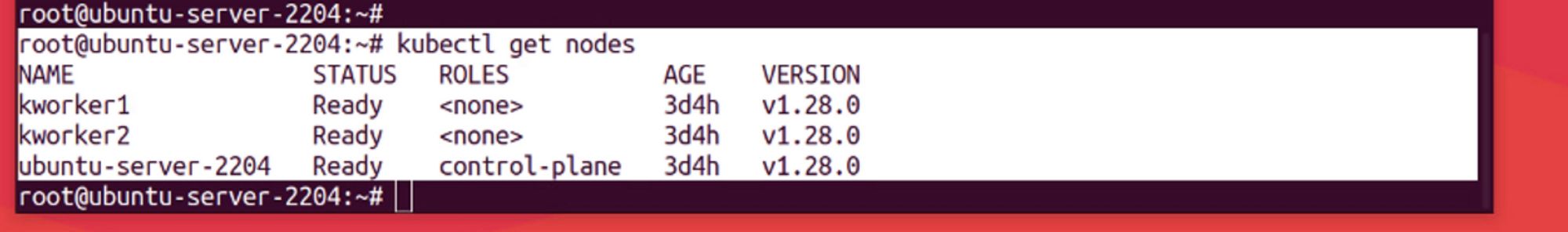
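If the output of kubeadm init has scrolled away, a fresh join command can normally be generated on the control plane. This is a minimal sketch, assuming it is run as root on the control-plane node; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Print a new "kubeadm join ..." command, including a fresh bootstrap token.
print_join_command() {
  if ! command -v kubeadm >/dev/null 2>&1; then
    echo "kubeadm not found; run this on the control-plane node"
    return 0
  fi
  kubeadm token create --print-join-command
}

print_join_command
# Run the printed command as root on each worker node. Because this guide
# uses CRI-O, it may need the same --cri-socket option passed to kubeadm init.
```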

- Check the nodes

On the control plane, check if the nodes joined successfully

kubectl get nodes

kubectl cluster-info
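Nodes usually report NotReady until the network plugin is up, so it can help to wait rather than poll by hand. The sketch below is one way to do that; the five-minute timeout is an arbitrary assumption and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Block until every node reports the Ready condition, or give up after 5 minutes.
wait_for_nodes() {
  if ! command -v kubectl >/dev/null 2>&1; then
    echo "kubectl not found; run this on the control plane"
    return 0
  fi
  kubectl wait --for=condition=Ready nodes --all --timeout=300s
}

wait_for_nodes
```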

How does Kubernetes handle containers?

Each container is designed to be repeatable, because it bundles all the dependencies it needs to run. Containers decouple applications from the host infrastructure, which simplifies deploying them across different environments. In a Kubernetes cluster, every node runs the containers that make up the Pods assigned to it. The containers in a Pod are grouped together and run on the same node.

Container images

A container image is like a complete package for running an application. It carries everything the application needs: the actual code, the environment it needs to run, and all necessary libraries. Containers are designed to be stateless and immutable. This means that changing the code of a running container is not good practice. Instead, build a new image that includes the changes and then recreate the container from the new image.
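In practice, replacing a container with one built from a new image is usually done by pointing the workload at the new image tag. A minimal sketch, assuming a Deployment named `web` with a container named `web` and a hypothetical image `registry.example.com/web:1.1`:

```shell
#!/usr/bin/env bash
# Hypothetical image reference for the rebuilt application.
new_image="registry.example.com/web:1.1"

update_image() {
  if ! command -v kubectl >/dev/null 2>&1; then
    echo "kubectl not found; skipping update to $1"
    return 0
  fi
  # Point the Deployment at the new image; its Pods are replaced gradually.
  kubectl set image deployment/web web="$1"
  # Wait until the new Pods are up, or fail after two minutes.
  kubectl rollout status deployment/web --timeout=120s
}

update_image "$new_image"
```

The running containers are never modified; old Pods are removed and new ones are created from the new image.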

Container runtimes

Container runtimes are the components that let Kubernetes run containers. They manage the container lifecycle, including starting and stopping the containers that run on the cluster. Runtimes that implement the Kubernetes CRI (Container Runtime Interface), such as containerd and CRI-O, are compatible with Kubernetes. By default, Kubernetes uses the node's default container runtime for a Pod. However, Kubernetes provides the RuntimeClass resource to select a specific runtime for a Pod when more than one container runtime is needed in the same cluster.
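A RuntimeClass is referenced from a Pod by name. The sketch below writes both objects to a temporary file for inspection. The names `example-runtime` and `runtime-demo` are hypothetical, and the `handler` value is an assumption: it has to match a handler that is actually configured in the node's container runtime.

```shell
#!/usr/bin/env bash
# Write an illustrative RuntimeClass and a Pod that selects it.
runtime_manifest="$(mktemp)"
cat > "$runtime_manifest" <<'EOF'
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: example-runtime        # hypothetical name
handler: runc                  # assumption: must match a handler configured on the node
---
apiVersion: v1
kind: Pod
metadata:
  name: runtime-demo
spec:
  runtimeClassName: example-runtime   # selects the RuntimeClass above
  containers:
    - name: app
      image: nginx:1.25
EOF

echo "manifest written to $runtime_manifest"
# To try it on a cluster: kubectl apply -f "$runtime_manifest"
```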

Why Use Kubernetes?

Kubernetes is one of the fastest-growing projects in the history of open-source software. It is widely relied upon for the following key reasons:

- Portability: Kubernetes enables quicker and easier deployment procedures, improving the flexibility amongst various cloud service providers. Because of this, organizations can grow quickly without having to restructure their infrastructure.

- Scalability: Kubernetes allows operations to scale quickly because it runs in a variety of environments, such as VMs, public clouds, and bare metal.

- High Availability: Kubernetes integrates reliable storage options to serve stateful workloads, addressing both application and infrastructure resilience. The worker nodes can also be set up for replication, which increases system availability overall.

- Open-Source Nature: Since Kubernetes is open source, many tools can be integrated with it for a better experience.

How does Kubernetes make DevOps more efficient?

Kubernetes is a powerful tool that enhances DevOps efficiency in several ways:

- Speeds up development: Kubernetes speeds up how quickly applications are deployed by automatically handling the setup, adjustment, and management of containerized apps.

- Scalability and Availability: Kubernetes lets organizations change the amount of resources they use right away. This feature is the Horizontal Pod Autoscaler (HPA), which automatically adjusts the number of Pods in a replication controller, Deployment, or ReplicaSet. Kubernetes checks resource usage at regular intervals, and whenever it finds that the application does not have enough resources, it creates more Pods to handle the extra work.

- Flexibility: Containerization, along with Kubernetes, enables users to utilize cloud setups like public, private, hybrid, or multi-cloud environments. Using Kubernetes minimizes the risk of losing performance when scaling up the environment.
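The scaling decision of the Horizontal Pod Autoscaler can be approximated with simple arithmetic: the target replica count is the current count multiplied by the ratio of observed to target utilization, rounded up. The sketch below computes that value and writes an example autoscaler manifest. It ignores the autoscaler's tolerance and the min/max bounds in the calculation, and the Deployment name `web` and the 60% CPU target are illustrative assumptions. Resource-based autoscaling also needs a metrics source, typically metrics-server, which this article does not install.

```shell
#!/usr/bin/env bash
# desired = ceil(current_replicas * current_utilization / target_utilization)
desired_replicas() {
  local current_replicas=$1 current_util=$2 target_util=$3
  # Integer ceiling division.
  echo $(( (current_replicas * current_util + target_util - 1) / target_util ))
}

# 3 Pods at 90% CPU with a 60% target: 3 * 90 / 60 = 4.5, rounded up.
desired_replicas 3 90 60

# Example autoscaler for a hypothetical Deployment named "web".
hpa_manifest="$(mktemp)"
cat > "$hpa_manifest" <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 60
EOF
# To try it on a cluster: kubectl apply -f "$hpa_manifest"
```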

Adopting Kubernetes alongside DevOps practices has produced significant results for organizations. Since DevOps became popular, an increasing number of businesses have embraced this approach to improve their deployments.

How does Kubernetes work with other DevOps tools?

Kubernetes has a rich ecosystem of DevOps tools that enhance its capabilities. Some of these tools include:

- Terraform: It's a tool for infrastructure as code, enabling users to define both cloud and on-prem resources in human-readable configuration files. These files can be version-controlled, reused, and shared. Terraform is capable of handling basic elements such as computing instances, storage, and networking.

- Kubespray: Kubespray combines Kubernetes and Ansible to deploy Kubernetes clusters. Its advantages include flexible deployment options and a simplified deployment process that balances flexibility with simplicity.

- Lens: Lens is a popular tool that simplifies interactions with Kubernetes clusters for both development and operational purposes. It offers a straightforward graphical user interface (GUI) that lets users work on Kubernetes clusters without the complexity of the command line. However, Lens is not just a GUI. For example, it comes with built-in Helm support, which makes installing Helm charts easy. Lens also provides visual insights into cluster activity and health, making it easier to monitor and understand the state of Kubernetes clusters.

- Helm: It's a tool designed for automating the creation, packaging, configuration, and deployment of Kubernetes applications by combining all the necessary configuration files into a single reusable package, called a chart. In a microservice architecture, the number of microservices grows as the application expands, which complicates management; packaging each service as a chart keeps this manageable.

- Prometheus: It's a monitoring system that collects metrics from systems by scraping them and stores them as time series, which helps in assessing the health of the infrastructure.

- Fluentd: It was created to address the challenges of collecting logs at large scale. The primary function of Fluentd is to streamline the collection and consumption of log data, making it easier to understand and use that data effectively.

- Kubeadm: It is a tool for bootstrapping Kubernetes clusters. Its main role is to perform the essential steps required to get a basic, functional cluster up and running.

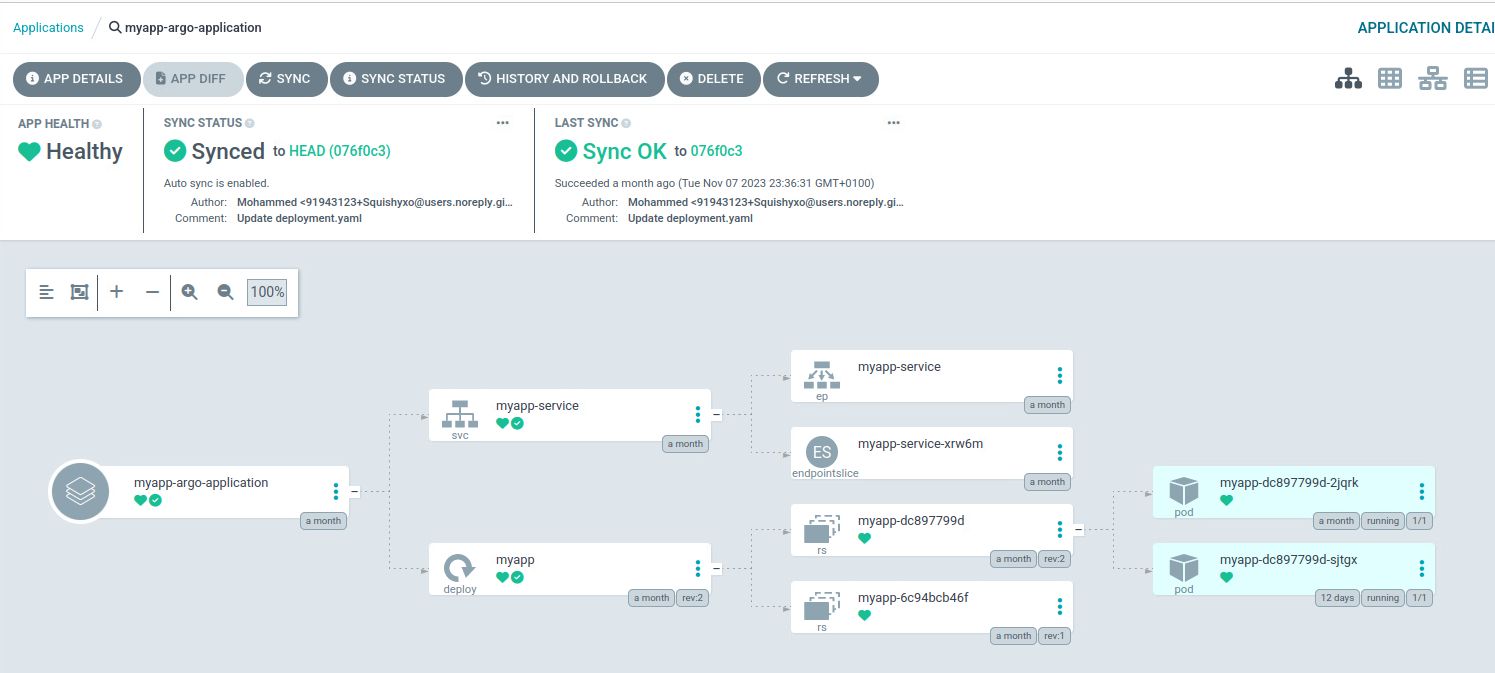

- ArgoCD: Argo CD is a declarative, continuous delivery tool specifically designed for Kubernetes. It functions using the GitOps principle, where a Git repository acts as the main source of truth for infrastructure and application configurations. Argo CD makes sure that the desired system state is automatically synchronized and maintained. This helps to align deployment processes with the repository's defined configurations.

ArgoCD

Let's examine ArgoCD in detail and see how to use it as a continuous delivery system with Kubernetes.

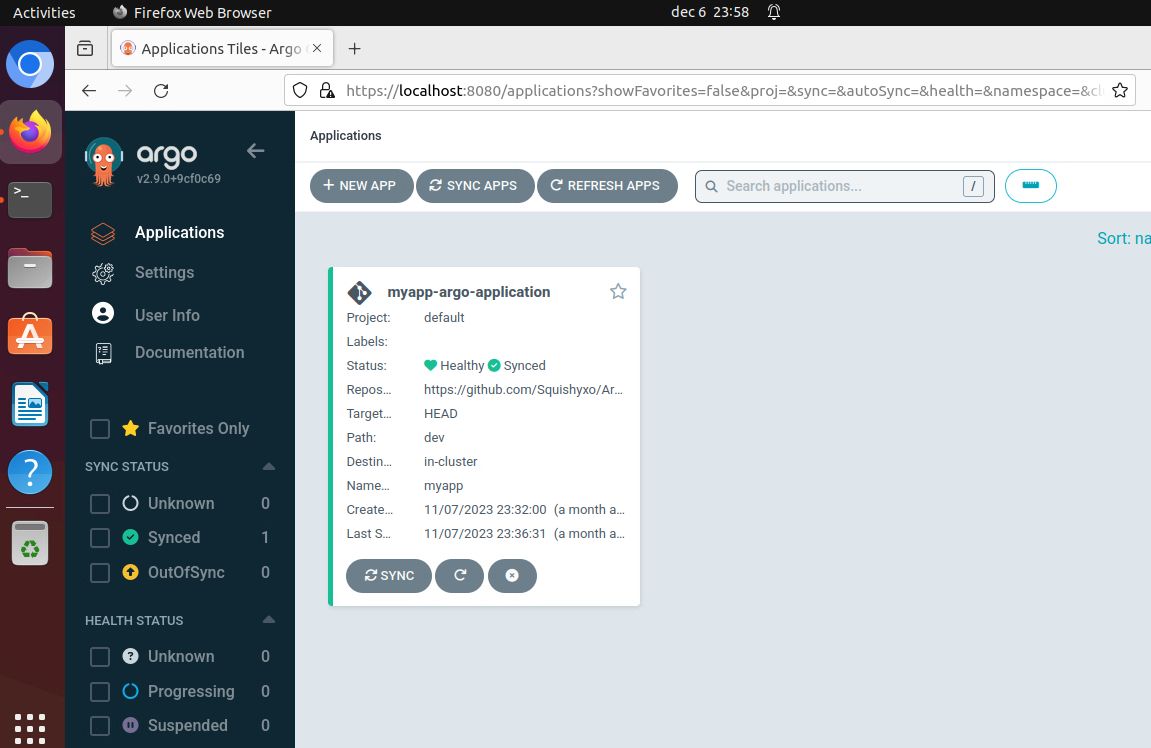

Create a Git repository

First, I need to create a Git repository that contains the declarative definitions of the Kubernetes resources I want to manage (e.g., YAML files for deployments, services, etc.). This repository is the "source of truth" for my applications.

https://github.com/Squishyxo/ArgoCD.git

This is a simple Git repository that contains a deployment YAML file to deploy my own website, a service YAML file, and the ArgoCD YAML file that tells ArgoCD to treat that repository as the source of truth.

Install ArgoCD in my Kubernetes cluster

kubectl create namespace argocd

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Now, let's access the web UI by port forwarding the ArgoCD server, which is running as a service:

kubectl port-forward svc/argocd-server -n argocd 8080:443

The API server can then be accessed using https://localhost:8080.
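Logging in to the web UI requires credentials. For a default installation from the manifest above, the user is typically `admin` and the initial password is stored in a secret named `argocd-initial-admin-secret`. The sketch below reads it; the function name is hypothetical, and the secret may be absent if it has already been deleted after the first login.

```shell
#!/usr/bin/env bash
# Print the initial password of the ArgoCD "admin" user.
print_argocd_password() {
  if ! command -v kubectl >/dev/null 2>&1; then
    echo "kubectl not found; run this on a machine with cluster access"
    return 0
  fi
  # Secret values are base64-encoded, so decode the password field.
  kubectl -n argocd get secret argocd-initial-admin-secret \
    -o jsonpath="{.data.password}" | base64 -d
  echo
}

print_argocd_password
```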

When I clone the Git repository and apply the application.yaml file, I immediately see that ArgoCD has created a new application, which it continuously synchronizes to match the desired state set up in the Git repository.

Now, ArgoCD will update the pods to match the number of replicas set up in the Git repository. If someone changes the number of pods with kubectl, ArgoCD will immediately revert the change to match the desired state declared in the Git repository.
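The application.yaml from the repository is not reproduced in this article. As a rough guide, an ArgoCD Application with automatic synchronization often looks like the sketch below. The application name, the `path`, and the destination namespace are assumptions and may differ from the actual file; `selfHeal` is the setting that makes ArgoCD revert manual changes made in the cluster.

```shell
#!/usr/bin/env bash
# Write an illustrative ArgoCD Application manifest to a temporary file.
app_manifest="$(mktemp)"
cat > "$app_manifest" <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: my-website             # hypothetical name
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/Squishyxo/ArgoCD.git
    targetRevision: HEAD
    path: .                    # assumption: manifests live in the repository root
  destination:
    server: https://kubernetes.default.svc
    namespace: default         # assumption
  syncPolicy:
    automated:
      prune: true              # delete resources that were removed from Git
      selfHeal: true           # revert manual changes made in the cluster
EOF

echo "manifest written to $app_manifest"
# To try it on a cluster with ArgoCD installed: kubectl apply -f "$app_manifest"
```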