This blog post dives deep into Multi-Layer Perceptrons (MLPs), one of the basic building blocks of deep learning. Explore how they model complex data and why they matter in fields like voice recognition and financial forecasting.

What is a Neuron?

In the context of neural networks, a neuron is the basic unit of computation. Imagine it as a small worker that receives input, processes it, and passes on the output. In a Multi-Layer Perceptron, each neuron receives signals from the previous layer, multiplies each signal by a weight, adds the results together with a bias, applies an activation function, and then sends the result to the next layer of neurons. This process is a bit like a game of telephone in which each player deliberately reshapes the message before passing it on. Neurons work together in layers to handle complex tasks like recognizing faces or understanding spoken words.
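To make the neuron's "simple calculations" concrete, here is a minimal sketch in plain Python. It assumes the common formulation in which a neuron computes a weighted sum of its inputs, adds a bias, and applies a sigmoid activation. The function names and the numbers are illustrative and do not come from any particular library.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs, plus a bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Three incoming signals with hypothetical weights and bias.
output = neuron([0.5, -1.0, 2.0], weights=[0.4, 0.3, 0.1], bias=0.1)
print(output)
```

The weights control how much each incoming signal matters, and the bias shifts how easily the neuron "fires". Learning, covered later, is the process of adjusting those numbers.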

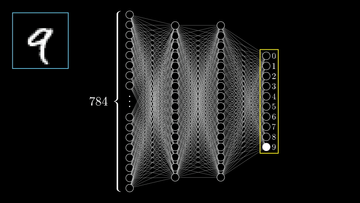

Structure of an MLP

A Multi-Layer Perceptron (MLP) is a network made up of layers stacked one after another. Each layer is filled with neurons, those tiny workers that process and pass on information, and each neuron is typically connected to every neuron in the layer before it. The first layer, called the input layer, receives the initial data, like images or sounds. The last layer, known as the output layer, gives the final result, like identifying an object in a picture. Between the input and output, there are one or more hidden layers where most of the processing happens. These layers work together to transform the input data step-by-step into a form that the output layer can use to make a decision or prediction. This layered structure allows MLPs to learn from data and make smart decisions based on what they've learned.
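As a rough illustration of this layered structure, the sketch below builds the weights and biases for a small network in plain Python. The layer sizes, the `init_mlp` helper, and the random initialization range are all assumptions chosen for the example, not a recommended recipe.

```python
import random

def init_mlp(layer_sizes, seed=0):
    """Create one (weights, biases) pair per connection between consecutive layers."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # weights[j][i] connects input i of the previous layer to neuron j of this layer.
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers

# Hypothetical sizes: 4 input features, hidden layers of 5 and 3 neurons, 2 outputs.
mlp = init_mlp([4, 5, 3, 2])
for index, (weights, biases) in enumerate(mlp, start=1):
    print(f"layer {index}: {len(weights)} neurons, {len(weights[0])} inputs each")
```

Note that the input layer has no weights of its own: it only holds the data, so four layer sizes produce three sets of weights.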

The Forward Pass: Data Flow through Layers

In a Multi-Layer Perceptron (MLP), the forward pass is like data going through a series of gates. It starts at the input layer, which simply holds the raw data. The data then moves to the first hidden layer, where each neuron combines the values it receives in a simple calculation. Think of this as the first gate, where the data gets a quick check. Then, the data moves to the next layer, or the next gate, where it gets checked again but in a slightly different way. Each layer works like a gate, turning the data into a representation that is a bit more useful for the task every time it passes through one. By the time the data reaches the output layer, the last gate, it's fully processed and ready to give us an answer or decision. The whole journey is straightforward, with data moving from one gate to the next without going back.
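The gate-by-gate journey can be sketched as a short loop. This example assumes sigmoid activations and a hypothetical 2-2-1 network with hand-picked weights; the `layer_forward` and `forward` helpers are names made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """Compute every neuron in one layer from the previous layer's outputs."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Feed the data through each layer in order; nothing flows backward."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical 2-2-1 network with hand-picked weights.
layers = [
    ([[0.5, -0.5], [0.3, 0.8]], [0.0, 0.1]),  # hidden layer: 2 neurons
    ([[1.0, -1.0]], [0.0]),                   # output layer: 1 neuron
]
prediction = forward([1.0, 2.0], layers)
print(prediction)
```

Each pass through the loop is one "gate": the output of one layer becomes the input of the next.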

Activation Functions

Activation functions in a Multi-Layer Perceptron (MLP) are like special rules that decide how a neuron should react to the information it receives. Without activation functions, every layer would perform only a linear calculation, and a stack of linear layers is no more powerful than a single one. That is not enough to solve more complex problems like recognizing images or understanding speech.
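To see why layers without activation functions add nothing, the sketch below compares two stacked layers against a single layer whose weights are the product of the two. The weight values are hypothetical and biases are left out for brevity.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def matmul(a, b):
    """Matrix product of a and b, both given as lists of rows."""
    columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns] for row in a]

# Hypothetical weights for two layers that use no activation function.
W1 = [[1.0, 2.0], [0.0, -1.0]]
W2 = [[0.5, 1.0]]

x = [3.0, 4.0]
two_layers = matvec(W2, matvec(W1, x))  # layer 2 applied to layer 1's output
one_layer = matvec(matmul(W2, W1), x)   # a single layer with merged weights

print(two_layers, one_layer)  # prints: [1.5] [1.5]
```

Both routes give the same answer for any input, so the extra layer buys no additional expressive power until a non-linear function is placed between the two.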

Think of activation functions as filters at a playground slide. These filters decide how much of the incoming signals (kids wanting to slide) should actually go through. Some signals might trigger a strong reaction and send lots of data forward (like a big push that sends a kid sliding fast), while others might not do much at all (like a gentle push that only moves the kid a little).

By using these rules or filters, MLPs can handle information in more complex and nuanced ways, which is essential for the tricky tasks we often ask them to perform, where the output does not depend on the input in a simple straight-line way. This ability to process information in non-linear, varied ways is what makes MLPs so powerful in the world of artificial intelligence.
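Three widely used activation functions are sigmoid, ReLU, and tanh. The post does not say which one it has in mind, so the sketch below simply shows how each of them reacts to a weak, neutral, and strong incoming signal.

```python
import math

def sigmoid(z):
    """Maps any input to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Blocks negative inputs entirely and passes positive inputs unchanged."""
    return max(0.0, z)

def tanh(z):
    """Maps any input to a value between -1 and 1."""
    return math.tanh(z)

for z in [-2.0, 0.0, 2.0]:
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  relu={relu(z):.1f}  tanh={tanh(z):+.3f}")
```

In terms of the slide analogy, ReLU is the strictest filter (negative signals do not get through at all), while sigmoid and tanh let every signal through but compress it into a fixed range.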

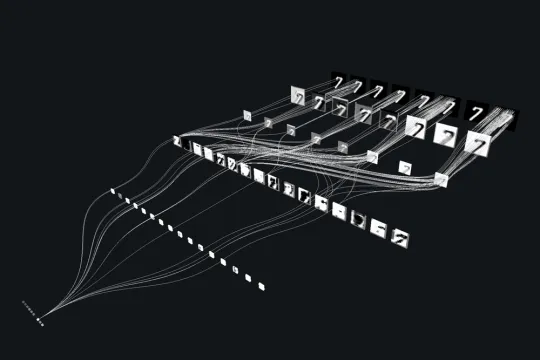

Backpropagation Algorithm: Learning from Errors

The backpropagation algorithm is like a teacher who helps a Multi-Layer Perceptron (MLP) learn from its mistakes. When an MLP tries to make a prediction, such as guessing what's in a picture, it might not always get it right. Training first measures how far the MLP's answer is from the correct answer using a loss function, and backpropagation then figures out how much each part of the network contributed to that error.
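Comparing the network's answer with the correct answer is usually done with a loss function that turns the gap into a single number. The sketch below uses mean squared error as one common choice; the post does not name a specific loss, so treat this as an assumption, and the sample values are made up.

```python
def mean_squared_error(predictions, targets):
    """Average squared gap between predicted and correct values; 0 means a perfect match."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

# Hypothetical network outputs versus the correct labels.
loss = mean_squared_error([0.9, 0.2, 0.4], [1.0, 0.0, 1.0])
print(loss)
```

The larger this number, the more the network has to change, and the backward pass works out in which direction.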

Think of it like going back over a path of footprints to see where you slipped. The algorithm starts from the end (the error in the answer) and moves backwards through the network, working out at each layer how much every weight and bias contributed to the mistake. An update rule such as gradient descent then nudges each of those values slightly in the direction that reduces the error, so every neuron's calculation becomes a little more accurate next time. Repeating this process of moving backward and making adjustments over many examples helps the MLP improve, so it can make better guesses in the future. It's like learning through trial and error, constantly tweaking and improving based on feedback.
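Here is a minimal sketch of that backward pass for a tiny 2-2-1 network. It assumes sigmoid activations, the loss 0.5 * (y - target)^2, plain gradient descent, and a single hypothetical training example. The starting weights, the learning rate, and the helper names are all illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, p):
    """Return the hidden activations and the single output of a 2-2-1 network."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hi for w, hi in zip(p["W2"], h)) + p["b2"])
    return h, y

def train_step(x, target, p, lr=0.5):
    """One backpropagation step; updates p in place and returns the loss before the update."""
    h, y = forward(x, p)

    # Output layer: error signal = dLoss/dy * dy/dz (the sigmoid derivative is y * (1 - y)).
    delta_out = (y - target) * y * (1.0 - y)

    # Hidden layer: pass the error backward through the output weights.
    delta_hidden = [delta_out * p["W2"][j] * h[j] * (1.0 - h[j]) for j in range(len(h))]

    # Gradient descent: nudge every weight and bias against its gradient.
    for j in range(len(h)):
        p["W2"][j] -= lr * delta_out * h[j]
        p["b1"][j] -= lr * delta_hidden[j]
        for i in range(len(x)):
            p["W1"][j][i] -= lr * delta_hidden[j] * x[i]
    p["b2"] -= lr * delta_out
    return 0.5 * (y - target) ** 2

# Hypothetical starting weights and one training example.
params = {"W1": [[0.1, -0.2], [0.4, 0.3]], "b1": [0.0, 0.0], "W2": [0.5, -0.5], "b2": 0.0}
x, target = [1.0, 0.0], 1.0

losses = [train_step(x, target, params) for _ in range(50)]
print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

The hidden-layer error is computed before any weight is changed, so every gradient refers to the same forward pass. Real training repeats this step over many examples rather than one.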

Conclusion

In conclusion, Multi-Layer Perceptrons (MLPs) are a fundamental tool in the world of deep learning, helping machines tackle complex tasks with a design loosely inspired by the way our brains work. From neurons and layers to the forward pass, activation functions, and backpropagation, we've explored how MLPs function and the significant role they play in advancing artificial intelligence. Whether you're just starting out or looking to deepen your knowledge, the journey into neural networks is as exciting as it is rewarding. Happy exploring, and keep learning!